Building an Open and Interoperable Voice Ecosystem

JarbasAl

OVOS Contributor

Building an Open and Interoperable Voice Ecosystem

Open Voice OS (OVOS) has always been about freedom and flexibility, giving users full control over their voice assistants and how they connect with the world. But freedom also brings a challenge: ensuring that all these independent components can understand and work with each other.

That’s where standards and interoperability come in.

Today, OVOS is doubling down on its commitment to protocol alignment, not by locking into a single ecosystem, but by speaking the languages of many. This post outlines where we are now and where we’re going, as OVOS evolves into a truly interoperable, protocol-aware voice platform. Much of this work is supported by the NGI0 Commons Fund, helping us build a more open, connected, and privacy-respecting voice ecosystem.

Why Standards Matter

Voice technology thrives when systems can communicate. Whether you're connecting a speech-to-text engine to a dialogue manager, or bridging a local agent to a cloud service, the key is clear, consistent interfaces.

Interoperability means:

- 🧩 Plug-and-play integration between tools, frameworks, and agents

- ⚙️ Reuse of existing infrastructure instead of reinventing the wheel

- 🔄 Resilience - the freedom to swap out components without breaking everything else

In short: standards keep the open in Open Voice OS.

Near-Future Work: MCP, UTCP, and A2A

A major focus for upcoming OVOS releases is protocol-level interoperability. Several new standards are being explored to allow OVOS to seamlessly connect with external AI ecosystems.

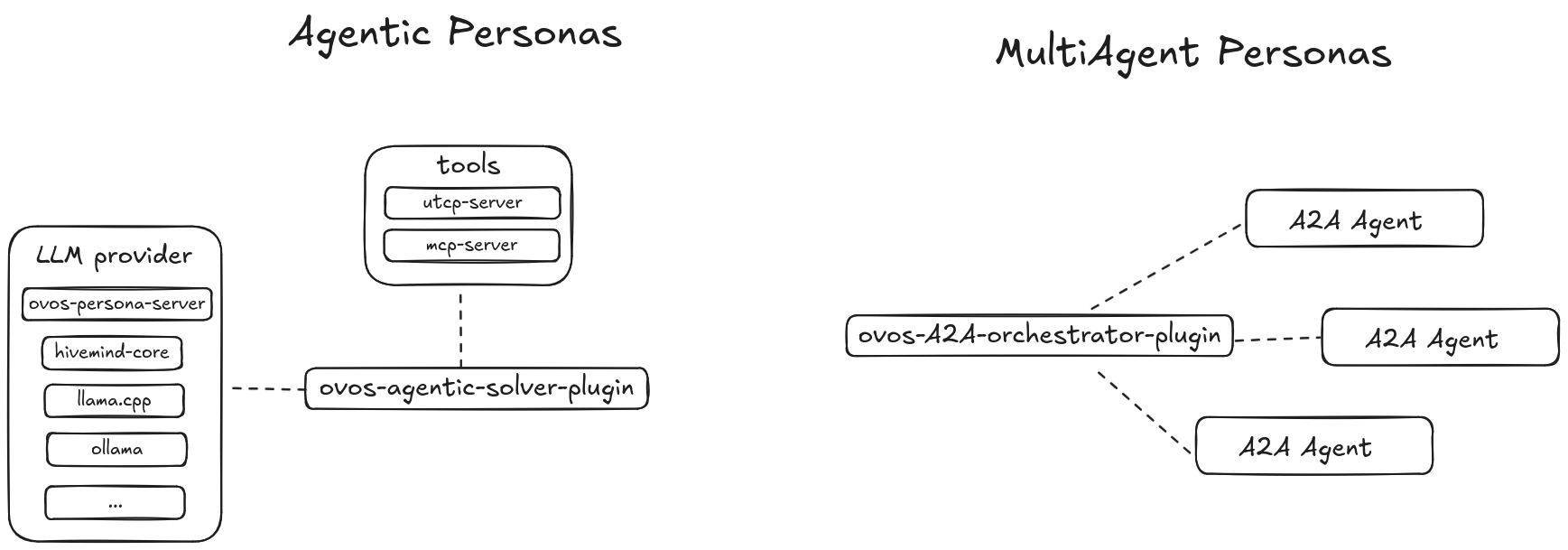

This is part of OVOS’s broader move toward multi-agent systems, exemplified by the ovos-persona-pipeline, which allows users to change the personality of OVOS on demand , effectively switching to an entirely new agent context.

These same personas will be the ones participating in MCP, UTCP, and A2A communication once these protocols land.

Model Context Protocol (MCP) and Universal Tool Calling Protocol (UTCP)

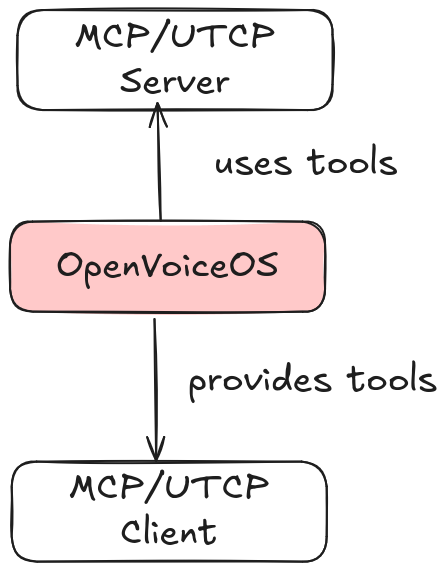

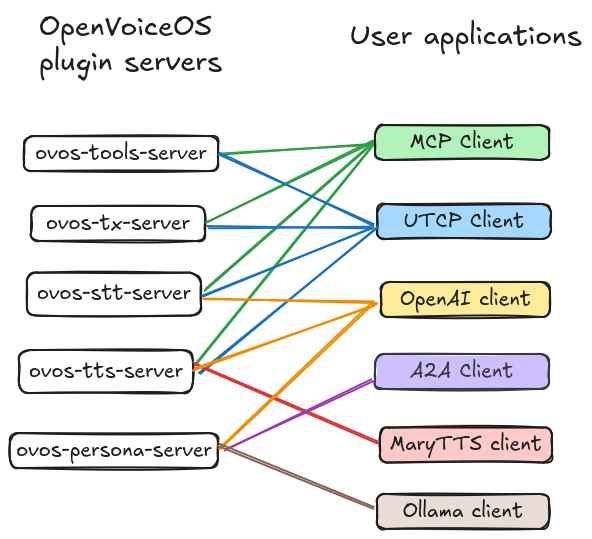

The Model Context Protocol (MCP) defines how agents and tools can exchange structured context and reasoning requests. In the near future, OVOS plans to both consume MCP-compatible tools and expose its own services (like STT, TTS, translation, and skills) over MCP.

This would allow external systems — including other assistants or orchestration layers — to treat OVOS capabilities as MCP tools.

In parallel, we’re also experimenting with Universal Tool Calling Protocol (UTCP). While MCP and UTCP have overlapping goals, they serve slightly different audiences. OVOS intends to support both, ensuring maximum compatibility and easy integration across ecosystems.

“We like UTCP but we love interoperability.”

Our goal is to make OVOS a universal interface layer, capable of understanding and serving requests in either protocol.

Agent-to-Agent Protocol (A2A)

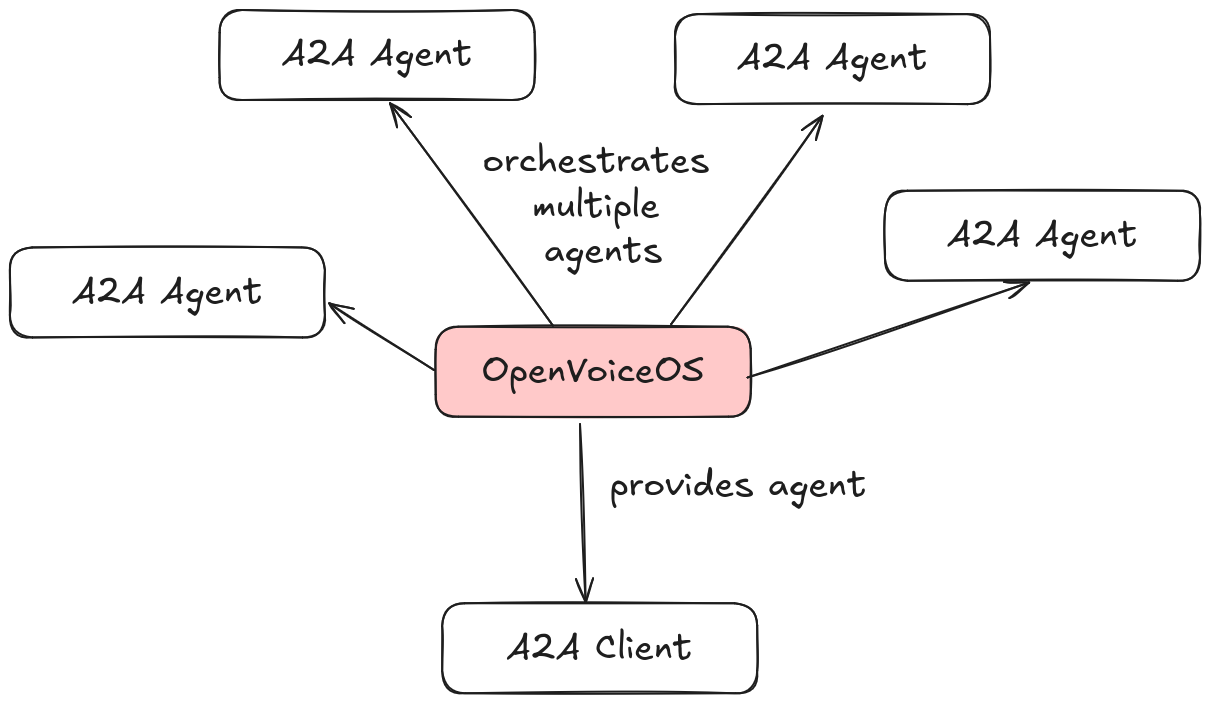

Finally, we’re integrating the Agent-to-Agent (A2A) protocol to allow multiple agents to discover, communicate, and collaborate dynamically.

This work is already underway in the ovos-persona-server, and will eventually power multi-agent orchestration where different personas or solver plugins can coordinate tasks collaboratively.

The OVOS Messagebus Protocol

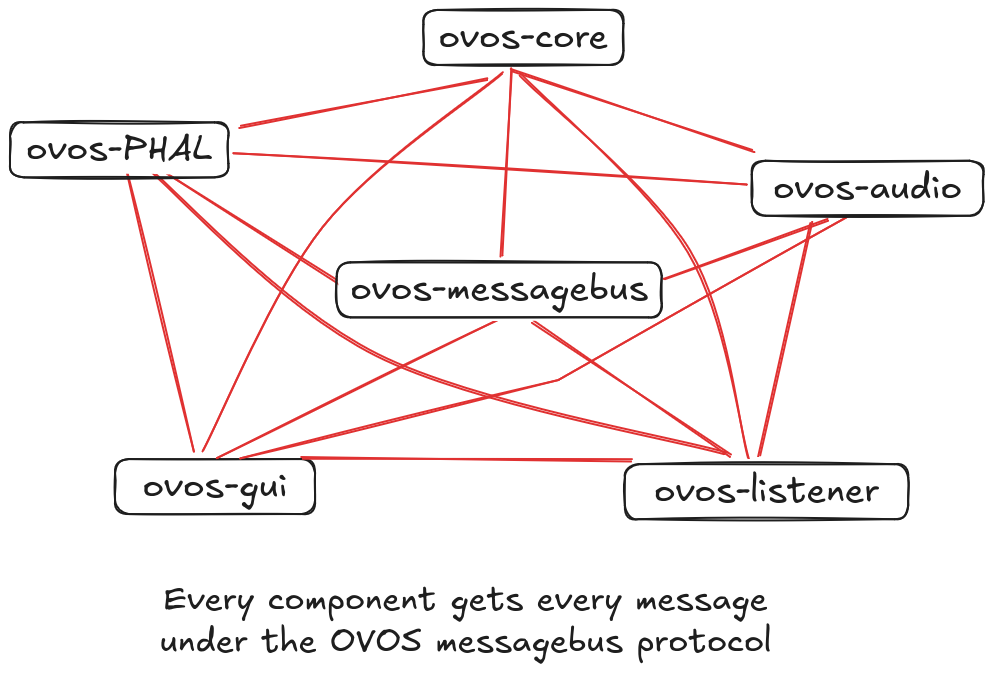

Under the hood, OVOS uses a websocket-based JSON messagebus to communicate internally. Historically, message formats were somewhat ad hoc, but that’s changing.

An index of Pydantic models is now being developed to describe all known OVOS message types forming what we call the OVOS Messagebus Protocol.

This documentation effort will make it easier for external tools, dashboards, or bridges (like HiveMind) to interact with OVOS safely and predictably.

HiveMind: A Transport Protocol for Federated Voice Networks

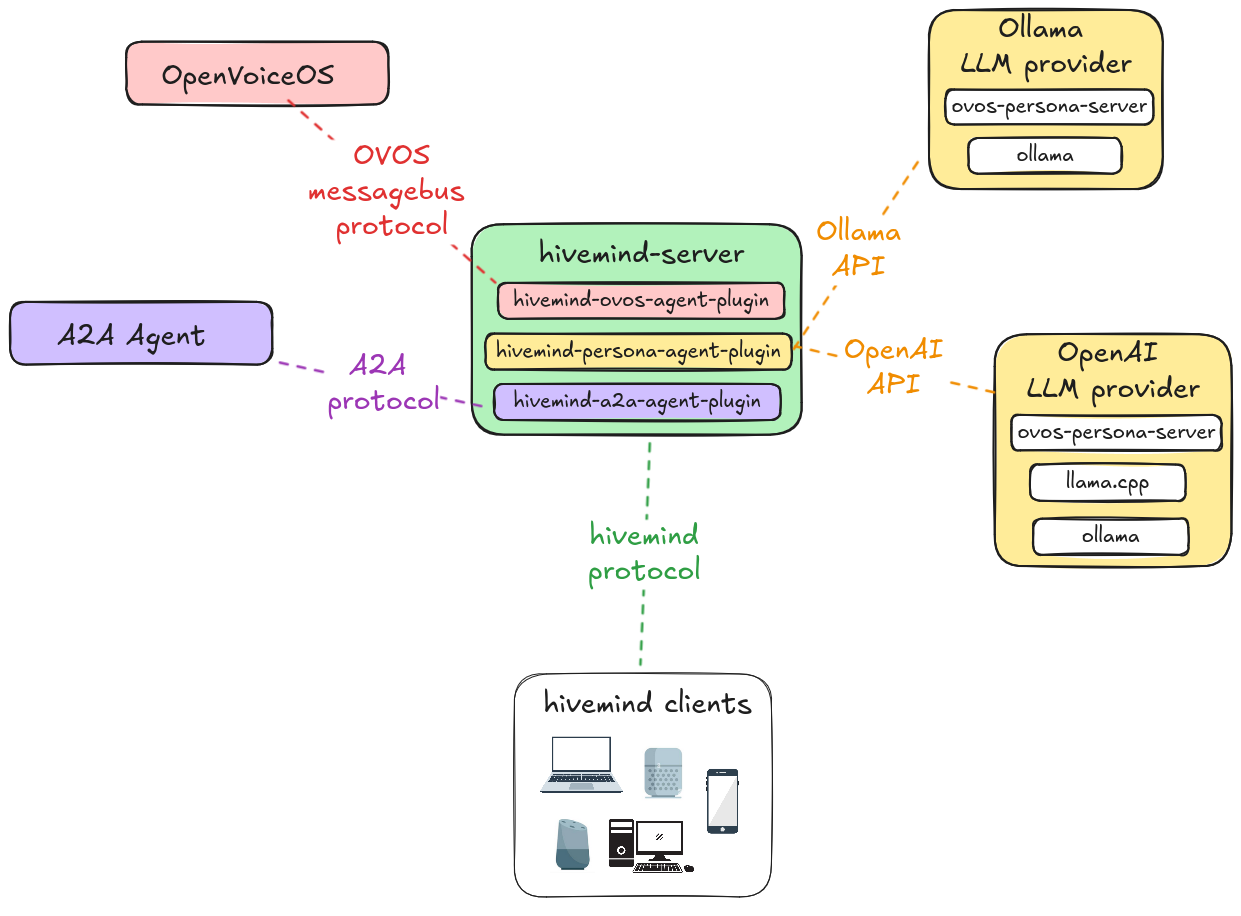

HiveMind is a hierarchical transport protocol, defining clear rules for how messages are routed and how nodes communicate across a distributed network.

This means OVOS can operate as just one participant within a much larger HiveMind network or power that network entirely.

While HiveMind was originally designed to support OVOS Messages, it’s agent-agnostic, capable of transporting any kind of message for any kind of AI agent. HiveMind achieves this flexibility through HiveMind agent plugins, which act as adapters between HiveMind and the agent logic itself.

The reference plugin uses OVOS as the agent, but any agent can be integrated, as long as the plugin can consume and emit OVOS messages, translating them to whatever the target agent requires.

This architecture enables a distributed ecosystem where:

- Different devices can run different agents, each with its own intelligence and capabilities.

- All HiveMind satellites remain compatible and interconnected.

- You can use the OVOS audio stack and plugin ecosystem (for STT, TTS, wake words, etc.) with any non-OVOS agent, simply by writing a small HiveMind agent plugin wrapper.

hivemind-a2a-agent-pluginwill allow connecting hivemind voice satellites to any A2A agent

With the ongoing effort to formalize the OVOS Messagebus Protocol, HiveMind will soon align even more closely, officially carrying the same message definitions inside, effectively becoming an implementation of the OVOS Messagebus over the HiveMind protocol.

OVOS Plugin Manager: Interoperability by Design

At the core of OVOS’s modularity is the OVOS Plugin Manager, which allows every single OVOS component to be swapped out dynamically.

In this context, the “protocol” is a shared base class with a well-defined API that each plugin implements. This ensures that all plugins, no matter where they’re deployed, expose the same interface to consumers.

This approach allows the same plugin to run anywhere:

- as a standalone HTTP microservice (e.g., STT, TTS, translation, or persona server),

- locally on an OVOS device,

- under a HiveMind satellite, or

- as a service under HiveMind server.

It can even be embedded into other projects that want immediate access to OVOS’s plugin ecosystem without importing the full OVOS stack.

Each plugin is effectively an interoperability layer across technologies: reusable, self-contained, and designed to connect to anything.

Use only the pieces you need without losing compatibility.

The Big Picture: “Connect Anything to OVOS, and OVOS to Anything”

All these efforts — MCP, UTCP, A2A, HiveMind, the Messagebus Protocol, the Plugin Manager, and even Wyoming adapters — share one goal: to make OVOS a universal connector in the voice and AI ecosystem.

Whether you’re using local models, cloud APIs, or other assistants, OVOS aims to act as the interoperability layer that ties them together.

The result? An assistant that doesn’t lock you in, it opens you up to an entire universe of tools, models, and agents.

Work in Progress

These initiatives are all part of ongoing research and development. MCP, UTCP, and A2A are planned but not yet implemented, while HiveMind, the Messagebus documentation, and standalone OVOS microservices are active and evolving.

The roadmap is ambitious but clear: make OVOS the most interoperable open-source assistant platform in existence.

With support from the NGI0 Commons Fund, we’re investing in open standards, transparent protocols, and bridges that connect communities across the open-source AI and voice landscape.

If you care about open standards, agentic AI, and the freedom to connect anything to anything, we’d love your input and contributions. OVOS is not just building an assistant, it’s building the protocols of open intelligence.

Help Us Build Voice for Everyone

OpenVoiceOS is more than software, it’s a mission. If you believe voice assistants should be open, inclusive, and user-controlled, here’s how you can help:

- 💸 Donate: Help us fund development, infrastructure, and legal protection.

- 📣 Contribute Open Data: Share voice samples and transcriptions under open licenses.

- 🌍 Translate: Help make OVOS accessible in every language.

We're not building this for profit. We're building it for people. With your support, we can keep voice tech transparent, private, and community-owned.